Generating Anki flashcards from WikiData

One of the new diversions I picked up during the pandemic is learning flashcards using spaced repetition. The principle is: new and unfamiliar cards are reviewed frequently, with the review interval increasing as the card is learned; once learned, cards are shown just often enough to retain them in long-term memory 1. I use an excellent piece of open-source software called Anki that stores and shows flashcards, calculating review intervals automatically 2.

I was intrigued by the idea of a database of flashcards as a “neuroprosthetic”, or extension of one’s brain 3. Using a spaced repetition system lets you remember much more than unassisted memory allows. More prosaically, reviewing flashcards seemed like a good use of periods of time that might otherwise be spent waiting, procrastinating, or mindlessly scrolling: “Dead time is Anki time” 4.

The references cited in this post thoroughly review evidence for the advantages of spaced repetition 1, 3, which I do not discuss here. Instead, this post focuses on a little project prompted by a whim of mine to learn all of the British Prime Ministers (remember the Sporcle quiz? 5). I have been meaning to start adding programming- or turbomachinery-related flashcards, but thus far this has felt too much like work, and so most of my Anki deck is semi-useful trivia.

My problem was that a deck of British Prime Minister flashcards for Anki did not exist, so I set out to make one. Automatically, using a Python script, of course.

The fount of all knowledge

We can fetch information required for the cards from the WikiData Query Service 6. The WikiData knowledge base is the backend for information boxes seen on the right of Wikipedia pages. Their Query Service ingests a SPARQL query and returns the results as JSON. SPARQL seemed a little alien to me to begin with, but a comprehensive tutorial with many examples is provided and I soon got the hang of it 7.

There is no “List of British Prime Ministers” page on WikiData. Instead, we ask for all entities for whom P39 Q14211, that is, their database entry states they have held the position (P39) of British Prime Minister (Q14211). Properties start with P and are general kinds of things (country, P17), whereas items start with Q and are specific things (UK, Q145). In simple cases, properties are prefixed by wdt: and items are prefixed by wd:. For nested statements, things are more complicated: p: refers to the entire statement, ps: points to the main value of that statement, while pq: points to qualifying properties such as the term of office.
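To make the prefixes concrete, here is a minimal Python sketch that assembles the two query shapes just described: the simple wdt:/wd: form and the nested p:/ps:/pq: form. The function names are illustrative and not part of the original script; P39, P580 and Q14211 are the identifiers used in this post.

```python
def simple_query(prop: str, item: str) -> str:
    """Simple form: the property links the person directly to the item."""
    return "SELECT ?person WHERE { ?person wdt:%s wd:%s . }" % (prop, item)


def nested_query(prop: str, item: str, qualifier: str) -> str:
    """Nested form: go via the statement node so a qualifier can be read too."""
    return (
        "SELECT ?person ?qual WHERE {\n"
        "  ?person p:%s ?st .\n"      # p: leads to the whole statement
        "  ?st ps:%s wd:%s ;\n"       # ps: is the main value of that statement
        "      pq:%s ?qual .\n"       # pq: is a qualifier, e.g. the start date
        "}"
    ) % (prop, prop, item, qualifier)


print(simple_query("P39", "Q14211"))
print(nested_query("P39", "Q14211", "P580"))
```

The nested form is the one needed here, because the start and end dates belong to the position statement rather than to the person.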

We have covered enough to look at the SPARQL query that collects the required data:

    # Query file to return British PMs by date
    SELECT DISTINCT
      ?personLabel                        # Name
      ?start                              # Start of term
      ?end                                # End of term
      ?preLabel                           # Name of predecessor
      ?sucLabel                           # Name of successor
      (SAMPLE(?pic) as ?pic)              # Choose one picture
      (SAMPLE(?party) as ?party)          # Choose one party to deal with floor-crossers
      (SAMPLE(?partycol) as ?partycol)    # Some parties have changed colour
    WHERE {
      # Enable English language labels
      SERVICE wikibase:label { bd:serviceParam wikibase:language "[AUTO_LANGUAGE],en". }

      # Get any position holders, save their picture and party affiliation
      ?person p:P39 ?posheld;             # Store position statement for later examination
              wdt:P18 ?pic;               # Return picture
              wdt:P102 ?partyst.          # Return political party

      # Check details of the position
      ?posheld ps:P39 wd:Q14211;          # Position must be British PM
               pq:P580 ?start.            # Get start date of matched position

      # Preceded by, succeeded by, end date
      # Optional so as to not rule out Walpole or Boris
      OPTIONAL { ?posheld pq:P1365 ?pre }
      OPTIONAL { ?posheld pq:P1366 ?suc }
      OPTIONAL { ?posheld pq:P582 ?end }

      # We have to get party label explicitly because the SAMPLE directive does
      # not work with an implicit ?...Label variable name
      # rdfs: is a special prefix to get labels manually
      ?partyst rdfs:label ?party.
      FILTER (langMatches( lang(?party), "EN" ) )  # Only the English label

      # Extract party colour Hex code
      ?partyst wdt:P465 ?partycol.
    }
    GROUP BY ?personLabel ?start ?end ?preLabel ?sucLabel
    ORDER BY ?start

WikiData provide an example Python script which is very easy to modify to fetch the results using SPARQLWrapper 8.

    import sys
    import os
    from SPARQLWrapper import SPARQLWrapper, JSON

    # Set constants
    ENDPOINT_URL = "https://query.wikidata.org/sparql"  # Where to fetch data
    QUERY_FILE = "query.sparql"  # Path to a query file
    USER_AGENT = "wikidata2anki Python/%s.%s" % (  # Identify ourselves to WikiData
        sys.version_info[0],
        sys.version_info[1],
    )

    # Load query
    with open(QUERY_FILE, "r") as f:
        query_str = f.read()

    # Send to remote and get results
    # After the WikiData Query Service example script.
    sparql = SPARQLWrapper(ENDPOINT_URL, agent=USER_AGENT)
    sparql.setQuery(query_str)
    sparql.setReturnFormat(JSON)
    results = sparql.query().convert()

    # Throw away metadata and just keep values
    results = results["results"]["bindings"]
    results = [{ki: ri[ki]["value"] for ki in ri} for ri in results]

    # Print the names
    for ri in results:
        print(ri["personLabel"])

Running this script prints the names in order of term start:

    Robert Walpole
    Spencer Compton, 1st Earl of Wilmington
    ...
    Theresa May
    Boris Johnson
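The dictionary comprehension that throws away the metadata is easier to follow on a small example. The sketch below applies the same flattening to a hand-written response in the shape of the SPARQL JSON results format; it is a stand-in for what the query service returns, not captured output.

```python
# Hand-written stand-in for sparql.query().convert(); not real query output
response = {
    "head": {"vars": ["personLabel", "start", "end"]},
    "results": {
        "bindings": [
            {
                "personLabel": {"type": "literal", "xml:lang": "en", "value": "Theresa May"},
                "start": {"type": "literal", "value": "2016-07-13T00:00:00Z"},
                "end": {"type": "literal", "value": "2019-07-24T00:00:00Z"},
            },
            {
                # Variables from an OPTIONAL clause that did not match are simply absent
                "personLabel": {"type": "literal", "xml:lang": "en", "value": "Boris Johnson"},
                "start": {"type": "literal", "value": "2019-07-24T00:00:00Z"},
            },
        ]
    },
}

# Same flattening as in the script: keep only the "value" of each binding
bindings = response["results"]["bindings"]
flat = [{key: row[key]["value"] for key in row} for row in bindings]

for row in flat:
    print(row["personLabel"], row["start"][:4], row.get("end", "")[:4])
```

Because unmatched optional variables are missing rather than empty, any later code that reads an end date, predecessor or successor has to cope with a missing key.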

One problem I initially had was that Churchill showed up six times in the list. He crossed the floor from the Conservatives to the Liberals, and then went back again. So he has three party affiliations on record (with different dates), and all of these showed up for each of his two terms. The SAMPLE directive in the SPARQL query chooses just one of the three party affiliations and fixes this problem.
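The duplication is a join effect: every party affiliation is paired with every term. A small Python sketch reproduces the count and the effect of grouping; the term and party labels are simplified stand-ins, not WikiData output, and keeping the first party seen is only one way to imitate SAMPLE, which may return any member of the group.

```python
from itertools import product

terms = ["first term", "second term"]                  # two terms as PM
parties = ["affiliation A", "affiliation B", "affiliation C"]  # three records

# Without aggregation, each term is matched with each affiliation
rows = list(product(terms, parties))
print(len(rows))  # 6

# Grouping by term and keeping one party per group mimics GROUP BY with SAMPLE
sampled = {}
for term, party in rows:
    sampled.setdefault(term, party)
print(len(sampled))  # 2
```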

Stacking the deck

Some more code manipulates the dictionary to format terms of office as year ranges, then downloads images using wget and makes them a consistent size using ImageMagick. Finally, we add a contextual note to PMs that had multiple non-consecutive terms.

    # Now loop over results and manipulate the data as required
    # (IMGDIR, the image directory, is assumed to be set with the other constants)
    for ri in results:

        # Join start and end dates into a year range field
        year_st = int(ri["start"][:4])
        try:
            year_en = int(ri["end"][:4])
            if year_st == year_en:
                ri["years"] = "%d" % year_st
            else:
                ri["years"] = "%d-%d" % (year_st, year_en)
        except KeyError:
            # No end date on record, so leave the range open
            ri["years"] = "%d-" % year_st

        # Generate image filename based on the PM's name
        imgname = (
            ri["personLabel"].replace(" ", "").split(",")[0]
            + "."
            + ri["pic"].split(".")[-1]
        )

        # Store image and HTML snippet
        ri["imgpath"] = os.path.join(IMGDIR, imgname)
        ri["imghtml"] = r'<img src="%s">' % imgname

        # Only download and resize if we have not got the image already
        if not os.path.exists(ri["imgpath"]):
            os.system("wget -nv -O %s %s" % (ri["imgpath"], ri["pic"]))
            os.system("convert -resize 360 %s %s" % (ri["imgpath"], ri["imgpath"]))

        # Fill in blank predecessor/successors
        for lab in ["sucLabel", "preLabel"]:
            ri.setdefault(lab, "N/A")

    # For multiple-term PMs, note as such
    for ri in results:
        others = [
            rj for rj in results if rj["personLabel"] == ri["personLabel"] and rj != ri
        ]
        if others:
            ri["others"] = "Also PM: " + ", ".join(rj["years"] for rj in others)
        else:
            ri["others"] = ""
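The year-range logic above is a natural candidate for a small pure function, which makes the three cases (single year, closed range, open range) easy to check in isolation. This is a sketch rather than code from the script: year_range is a hypothetical name, it takes one flattened result dictionary, and the timestamps in the usage lines are illustrative inputs.

```python
def year_range(row: dict) -> str:
    """Format a term of office as 'YYYY', 'YYYY-YYYY', or 'YYYY-' if there is no end."""
    start = int(row["start"][:4])
    if "end" not in row:
        return "%d-" % start
    end = int(row["end"][:4])
    return "%d" % start if start == end else "%d-%d" % (start, end)


print(year_range({"start": "2016-07-13T00:00:00Z", "end": "2019-07-24T00:00:00Z"}))
print(year_range({"start": "1834-11-17T00:00:00Z", "end": "1834-12-09T00:00:00Z"}))
print(year_range({"start": "2019-07-24T00:00:00Z"}))
```

Checking for the key explicitly, instead of catching KeyError, keeps a typo in the start field from being mistaken for a missing end date.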

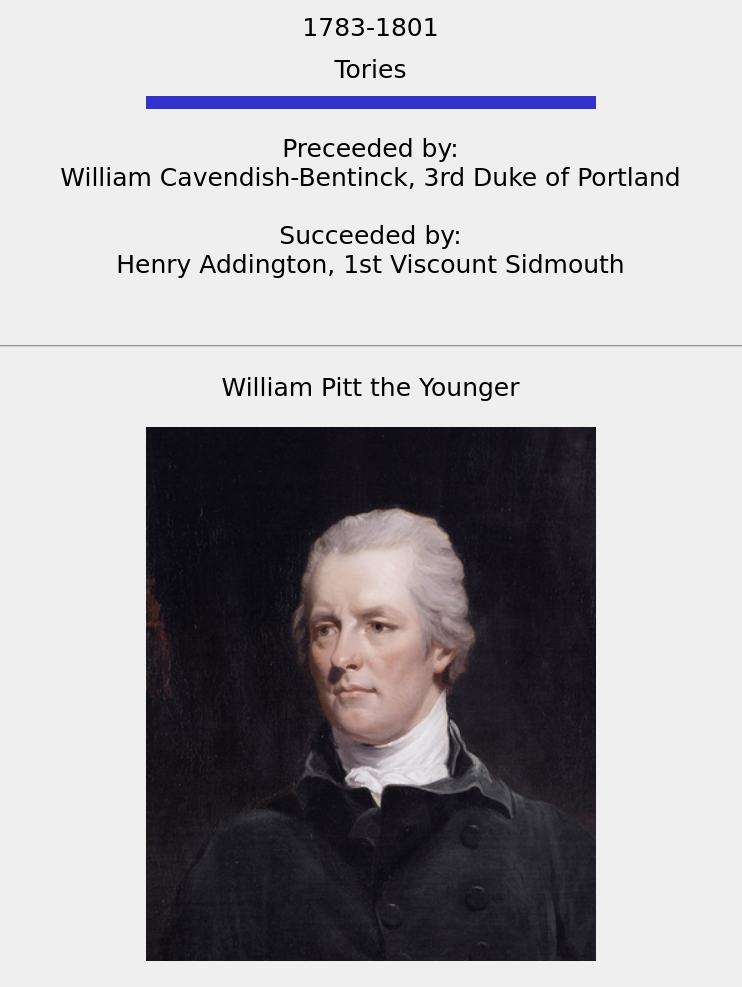

Now we have got the information ready, we can prepare flashcards using another Python library, genanki 9. The rest of the code is mostly boilerplate but it took some time to get the HTML templates for the cards looking slick. The end result is,

The preceded and succeeded fields give more structure to the memory required for a long list, and I was particularly pleased that WikiData store hex codes for party colours I could use directly in the HTML!
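For readers who have not used genanki, the boilerplate might look roughly like the sketch below. It is not the template from my script: the field names, the card layout and the function names are made up for illustration, and it assumes the party colour arrives as a hex triplet without a leading #. The genanki import sits inside the function so that the field mapping can be tried without the library installed.

```python
FIELDS = ["Name", "Years", "Party", "Colour", "Predecessor", "Successor", "Image", "Others"]


def note_fields(ri: dict) -> list:
    """Map one processed result dictionary onto the field order in FIELDS."""
    return [
        ri["personLabel"], ri["years"], ri["party"], ri["partycol"],
        ri["preLabel"], ri["sucLabel"], ri["imghtml"], ri["others"],
    ]


def build_package(results, model_id, deck_id, deck_name, path):
    """Write an .apkg file; the two IDs are arbitrary integers fixed once per deck."""
    import genanki  # third-party library, imported lazily

    model = genanki.Model(
        model_id,
        "Office holder",
        fields=[{"name": name} for name in FIELDS],
        templates=[{
            "name": "Picture to name",
            "qfmt": "{{Image}}",
            "afmt": '{{FrontSide}}<hr id="answer">{{Name}} ({{Years}})<br>'
                    '<span style="color:#{{Colour}}">{{Party}}</span><br>'
                    "Preceded by {{Predecessor}}, succeeded by {{Successor}}<br>"
                    "{{Others}}",
        }],
    )
    deck = genanki.Deck(deck_id, deck_name)
    for ri in results:
        deck.add_note(genanki.Note(model=model, fields=note_fields(ri)))

    package = genanki.Package(deck)
    # Multi-term PMs share one image, so remove duplicate paths
    package.media_files = sorted({ri["imgpath"] for ri in results})
    package.write_to_file(path)
```

The colour field is substituted straight into the style attribute, which is what lets the stored hex code set the party colour without any lookup table.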

The script works equally well for other offices such as the Prime Minister of Australia (Q319145) or Governor of California (Q887010). In fact, I modified the script to take the item ID as a command-line argument, an idea cribbed from a similar script for geography 10.
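A minimal sketch of how the item ID could be taken from the command line and swapped into the query is given below. The function names and the validation pattern are assumptions for illustration, not the interface of my script; a plain string replace is used because the braces in a SPARQL query would clash with str.format.

```python
import argparse
import re


def parse_item(argv=None) -> str:
    """Read the office's item ID from the command line and sanity-check it."""
    parser = argparse.ArgumentParser(description="Make an Anki deck of office holders")
    parser.add_argument("item", help="WikiData item ID of the office, e.g. Q14211")
    args = parser.parse_args(argv)
    if not re.fullmatch(r"Q[1-9][0-9]*", args.item):
        parser.error("item must look like Q14211")
    return args.item


def retarget(query_str: str, item: str) -> str:
    """Swap the hard-coded British PM item for another office."""
    return query_str.replace("wd:Q14211", "wd:" + item)


item = parse_item(["Q319145"])  # pass None instead to read sys.argv
print(retarget("?posheld ps:P39 wd:Q14211;", item))
```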

A possible extension would be to have more query files and card templates, for British monarchs or World Snooker Champions, say. But premature generality is the thief of time.

Conclusion

I wanted to add British Prime Ministers to my spaced repetition flashcard database. So I wrote a Python script that fetches the required information from WikiData using a SPARQL query, then munges the data and generates the cards in Anki format.

My script is available on sourcehut for readers to create decks of their own.